Web scraping, or data extraction from websites, can be done with various tools and methods. The hardest sites to scrape are usually the ones that look for suspicious behavior and non-human patterns, so the tools we use for scraping must simulate human behavior as closely as possible.

The tools best suited to simulating human behavior are browser testing tools, and one of the best known and most widely used of them is Selenium.

In the previous post in this series we talked about Puppeteer and how it is used for web scraping. In this article we will focus on another library, Selenium.

- To align on terms: web scraping, also known as web harvesting or web data extraction, is the automated extraction of data from websites. A scraping script may access the URL directly using HTTP requests or by driving a web browser. The second approach is exactly how Selenium works: it drives a web browser.

The simplest way to scrape these kinds of websites is with an automated web browser, such as a Selenium WebDriver, which can be controlled from several languages, including Python. Selenium was originally developed to automate tests for web applications, but it has since become a popular tool for getting data from websites. Because it automates a real browser, Selenium lets you avoid some of the honeypot traps that many scraping scripts run into on high-value websites. Selenium is not always the best way to scrape data, though. It depends on the web page and on the amount of data to be scraped.

What is Selenium?

Selenium is a web testing framework that automates various browsers and was initially built for testing front-end components and websites. As you can probably guess, whatever one person would like to test, another would like to scrape. That would seem to make Selenium a perfect library for scraping. Or is it?

A Brief History

Selenium was first developed by Jason Huggins in 2004 as an internal tool at the company he worked for. It has evolved a lot since then, but the concept has remained the same: a framework that simulates (or, in truth, actually operates) a web browser.

How does selenium work?

Basically, Selenium is a library that can control an automated instance of Google Chrome, Firefox, Safari, Vivaldi, and other browsers. You can use it in an automated process to imitate a normal user’s behavior. If, for example, you would like to check your competitor’s top 10 ranking products daily, you would write a piece of code that opens a Chrome window (automatically, using Selenium), navigates to your competitor’s storefront or search results on Amazon, and scrapes the data of their leading products.
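To make the competitor example a bit more concrete, here is a minimal Python sketch of that flow. The store URL and the `.product-title` selector are hypothetical placeholders, and the function names are ours, not part of Selenium. The helper only relies on the driver's `get` and `find_elements` methods, so it can be tried with any WebDriver instance.

```python
from typing import List

# Selenium's locator strategy string for CSS selectors
# (the same value as selenium.webdriver.common.by.By.CSS_SELECTOR).
CSS_SELECTOR = "css selector"


def scrape_top_products(driver, url: str, selector: str, limit: int = 10) -> List[str]:
    """Open `url` in the browser and return the text of the first `limit` matches."""
    driver.get(url)  # navigate, as a user would by typing the address
    elements = driver.find_elements(CSS_SELECTOR, selector)
    return [element.text.strip() for element in elements[:limit]]


def open_chrome():
    """Start a Chrome window controlled by Selenium (requires `pip install selenium`)."""
    from selenium import webdriver  # imported lazily so the helper above has no hard dependency

    return webdriver.Chrome()


# Possible usage (URL and selector are assumptions for illustration only):
#
#   driver = open_chrome()
#   try:
#       names = scrape_top_products(driver, "https://example.com/store", ".product-title")
#   finally:
#       driver.quit()  # always close the browser, even if scraping fails
```

Running this once a day from a scheduler would cover the daily check described above.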

Selenium is composed of four components that make the testing (or scraping) process possible:

Selenium IDE:

This is a full IDE for testing. It ships as a Chrome extension or a Firefox add-on and allows recording, editing, and debugging tests. This component is less useful for scraping, since scraping is usually done in code through the API.

Selenium Client API:

This is the API our code uses to communicate with Selenium. There are client libraries for different programming languages, so scraping can be done in JavaScript, Java, C#, R, Python, and Ruby. It is continuously supported and improved by a strong community.

Selenium WebDriver:

This is the component of Selenium that actually plays the browser’s part in the scraping. The driver is like a remote control that connects to a specific browser (as with physical remotes, each driver is designed to control one specific browser), and through it we can control the browser and tell it what to do.

Selenium Grid:

This is less a part of the scraping workflow itself and more a tool that lets us run multiple Selenium instances on remote machines.

Since our previous article talked about Puppeteer and praised it for being the best tool for web scraping, let’s examine the differences and similarities between Selenium and Puppeteer.

What is the difference between Selenium and Puppeteer?

This is a very common question, and the distinction is very important. The two libraries are very similar in concept, but there are a few key points to consider. Puppeteer’s main disadvantage is that it is limited to JavaScript, since the API Google publishes supports JavaScript only. And since it is a library written by Google, it targets the Chrome browser. If you prefer to write all of your code in a language other than JavaScript, or it is important to your company to use a web browser other than Google Chrome, I would consider using Selenium.

For scraping purposes, the fact that Puppeteer supports only Chrome really does not matter, in my opinion. It matters more for testing, where you would want to test your app or website on different browsers.

Needing to know JavaScript in order to use it might be a bit more limiting, though I believe it’s always good to learn new skills and programming languages, and controlling Puppeteer with JavaScript code is a great place to start.

Should I choose Puppeteer or Selenium for web scraping?

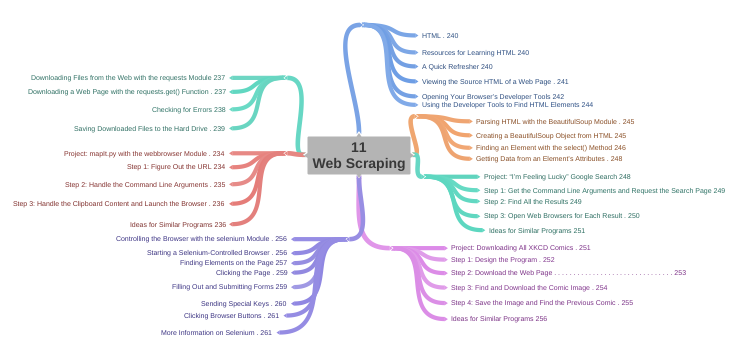

Puppeteer has significant advantages over Selenium. Chief among them is the fact that Puppeteer is faster than Selenium. If you are planning a large-scale scraping operation, that is a point to consider.

In addition, Puppeteer has more options that are vital for scraping, such as network interception. This is another great advantage over Selenium that allows you to handle each network request and response that is generated while loading a page and log them, stop them, or generate more of them.

This allows you to intercept and stop requests for certain resources, such as images, CSS, or JavaScript files, reducing both your response time and the traffic used. Both are very important when scraping on a large scale.
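Selenium has no direct counterpart to Puppeteer's per-request interception, but for readers who stay with Selenium, a rough approximation exists on Chromium-based browsers: the Python bindings expose `execute_cdp_cmd`, which forwards Chrome DevTools Protocol commands such as `Network.setBlockedURLs`. The sketch below assumes a Chrome or Edge driver, and the URL patterns are illustrative defaults we made up. It blocks by URL pattern only and does not let you inspect or modify individual requests.

```python
from typing import Iterable, List

# Hypothetical default patterns; adjust them to the site being scraped.
DEFAULT_BLOCKED = ("*.png", "*.jpg", "*.jpeg", "*.gif", "*.css", "*.woff2")


def block_static_resources(driver, patterns: Iterable[str] = DEFAULT_BLOCKED) -> List[str]:
    """Ask a Chromium-based driver to skip requests whose URL matches `patterns`.

    Call this before `driver.get(...)` so the rules are active when the page loads.
    """
    urls = list(patterns)
    # The Network domain must be enabled before blocking rules take effect.
    driver.execute_cdp_cmd("Network.enable", {})
    driver.execute_cdp_cmd("Network.setBlockedURLs", {"urls": urls})
    return urls
```

Because this goes through the DevTools Protocol, it will not work with Firefox or Safari drivers, which is part of why Puppeteer still has the edge here.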

If you are planning on scraping for business purposes, whether to build a comparison website, a business intelligence tool, or anything else business-oriented, we would suggest you use an API solution.

To sum it up, here are the main pros and cons of Selenium and Puppeteer for web scraping.

Pros of Selenium for web scraping:

Works with many programming languages.

It can be used with many different browsers and platforms.

Can manually record tests/small scrape operations.

It is powered by a great community.

Cons of Selenium for web scraping:

Slower than Puppeteer.

It has less control over the way the scraping is done and has less advanced features.

Pros of Puppeteer for web scraping:

Faster than other libraries.

It is easier to use: no need to install any external driver.

It gives you more control and more options, such as network interception.

Cons of Puppeteer for web scraping:

The main drawback of Puppeteer is that it currently works only with JavaScript.

To conclude, since most of the advantages of Selenium over Puppeteer are centered around testing, for scraping I would definitely recommend giving Puppeteer a try, even at the price of learning JavaScript.

I know Puppeteer isn’t for everyone. You might already be invested in Selenium, want to write a Python scraper, or just not like Puppeteer for some reason. That’s OK, and in that case let’s see how to use Selenium for web scraping.

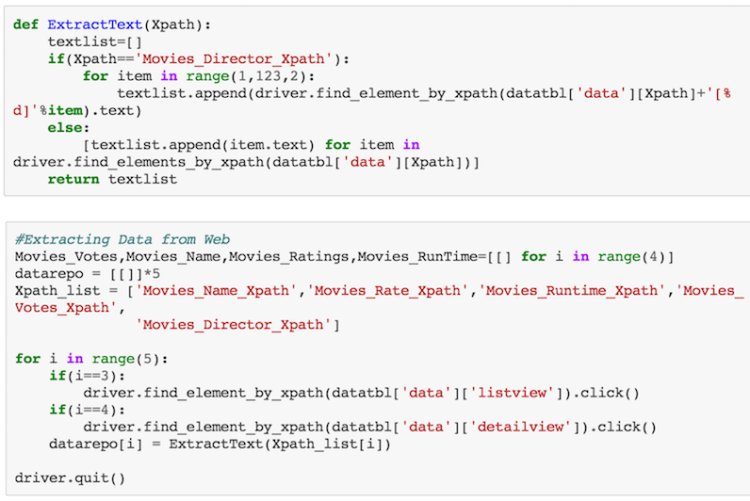

How is Selenium used for web scraping?

Web scraping using Selenium is rather straightforward. I don’t want to go deep into a specific language (we will add more language-specific tutorials in the future), but the steps are very similar in every language. Feel free to use the official Selenium documentation for in-depth details. The first and most important step is to install the Selenium WebDriver component.

Install Selenium in JavaScript:

npm install selenium-webdriver

Install Selenium in Python:

pip install selenium

Then you can start using the library according to the documentation.
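Taking Python as one example, a first script after installation might look like the sketch below. It navigates to a page and returns the raw HTML of the first element matching a CSS selector. The function names are ours, the URL and selector in the usage comment are placeholders, and the `--headless=new` flag assumes a recent Chrome version.

```python
from typing import Optional

# Same string as selenium.webdriver.common.by.By.CSS_SELECTOR.
CSS_SELECTOR = "css selector"


def fetch_component_html(driver, url: str, selector: str) -> Optional[str]:
    """Navigate to `url` and return the outer HTML of the first match, or None."""
    driver.get(url)
    matches = driver.find_elements(CSS_SELECTOR, selector)
    if not matches:
        return None  # the component is not on the page (or has not rendered yet)
    return matches[0].get_attribute("outerHTML")


def headless_chrome():
    """Create a Chrome driver without a visible window (requires `pip install selenium`)."""
    from selenium import webdriver  # lazy import keeps the helper above dependency-free

    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")  # assumption: a recent Chrome build
    return webdriver.Chrome(options=options)


# Possible usage (placeholder URL and selector):
#
#   driver = headless_chrome()
#   try:
#       html = fetch_component_html(driver, "https://example.com", "div.listing")
#   finally:
#       driver.quit()
```

Pages that render content with JavaScript may need an explicit wait before the element appears; the official documentation covers the available wait helpers.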

3 Best practices for web scraping with Selenium

Scraping with Selenium is rather straightforward. First, you need to get the HTML of the div, component, or page you are scraping. This is done by navigating to that page using the web driver and then using a selector to extract the data you need. There are three key points you should pay attention to, though:

1. Use a good proxy server with IP rotation

This is the easiest pitfall to fall into if you are a programmer just starting your scraping journey: if you do not use a good web proxy service, you will get blocked. Most modern sites, including Google, Amazon, Airbnb, eBay, and many others, use advanced anti-scraping mechanisms. Some put more effort into it than others, but most of them will start blocking you very quickly if you don’t use a proxy service and change your IP address every X requests. What is X? It depends on the website, but it usually falls between 5 and 20.

Once you have your proxy in place and a rotation mechanism for the IPs you use, the number of times you get blocked by websites should drop to nearly zero.
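One simple way to implement the rotation described above is a round-robin pool that switches proxy after a fixed number of requests. The sketch below is a minimal Python version. The class name, the default of 10 requests per proxy, and the proxy addresses in the usage comment are assumptions for illustration. The `--proxy-server` flag is a standard Chrome command-line switch.

```python
from itertools import cycle
from typing import Iterable


class ProxyRotator:
    """Hands out proxies round-robin, switching after `requests_per_proxy` requests."""

    def __init__(self, proxies: Iterable[str], requests_per_proxy: int = 10):
        proxy_list = list(proxies)
        if not proxy_list:
            raise ValueError("at least one proxy is required")
        if requests_per_proxy < 1:
            raise ValueError("requests_per_proxy must be at least 1")
        self._pool = cycle(proxy_list)
        self._limit = requests_per_proxy
        self._used = 0
        self._current = next(self._pool)

    def next_proxy(self) -> str:
        """Return the proxy to use for the next request."""
        if self._used >= self._limit:
            self._current = next(self._pool)  # quota reached: move to the next address
            self._used = 0
        self._used += 1
        return self._current


def chrome_with_proxy(proxy: str):
    """Start Chrome routed through `proxy`, e.g. "203.0.113.5:8080" (requires selenium)."""
    from selenium import webdriver  # lazy import: the rotator itself needs no dependency

    options = webdriver.ChromeOptions()
    options.add_argument(f"--proxy-server={proxy}")
    return webdriver.Chrome(options=options)


# Possible usage (addresses are placeholders):
#
#   rotator = ProxyRotator(["203.0.113.5:8080", "203.0.113.6:8080"], requests_per_proxy=10)
#   driver = chrome_with_proxy(rotator.next_proxy())
```

Since the proxy is set when Chrome launches, switching to a new address means starting a new driver, so in practice you would recreate the driver whenever `next_proxy()` returns a different value.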

2. Find the sweet spot in your crawling rate

Crawling too fast is a big problem and will trigger a lot of the anti-scraping defenses on the websites you are scraping. Of course, you want scraping to be as fast as possible, but scraping too fast can end up painfully slow once blocks and retries start piling up.

Experiment with it. Start with one request per second and increase the rate gradually. Make sure that for this test you use a different IP address every time and that you fetch different objects from the website you are testing.
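The experiment above can be sketched as a small throttle that you call between requests and tune up or down. This is only one possible shape: the class name, the 30% jitter, and the speed-up and slow-down factors are assumptions, not recommended values.

```python
import random
import time
from typing import Callable


class Throttle:
    """Spaces out requests to a target rate, with random jitter to avoid a fixed rhythm."""

    def __init__(self, requests_per_second: float = 1.0, jitter: float = 0.3,
                 sleep: Callable[[float], None] = time.sleep):
        if requests_per_second <= 0:
            raise ValueError("requests_per_second must be positive")
        self.requests_per_second = requests_per_second
        self.jitter = jitter
        self._sleep = sleep  # injectable so the throttle can be tested without waiting

    def delay(self) -> float:
        """Seconds to pause before the next request, randomized by +/- jitter."""
        base = 1.0 / self.requests_per_second
        return base * random.uniform(1 - self.jitter, 1 + self.jitter)

    def wait(self) -> float:
        """Pause before the next request and return how long the pause was."""
        pause = self.delay()
        self._sleep(pause)
        return pause

    def speed_up(self, factor: float = 1.25) -> None:
        """Call after a clean batch of responses to probe a higher rate."""
        self.requests_per_second *= factor

    def slow_down(self, factor: float = 2.0) -> None:
        """Call after a block or CAPTCHA to back off."""
        self.requests_per_second /= factor
```

A scraping loop would call `wait()` before each `driver.get(...)`, `speed_up()` after a run of successful pages, and `slow_down()` as soon as a block shows up, which converges on the sweet spot for that site.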

3. Use user-agent rotation

When sending a request for a web page or an object, the browser sends a header called User-Agent. It is necessary to change it every few requests and to always send a “legitimate” User-Agent (one that conceals the fact that this is a headless browser).
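A minimal Python sketch of this rotation follows. The User-Agent strings are illustrative examples of desktop browser values and will go stale, so keep your own list current. The class name and the default of switching every 5 requests are assumptions. The `--user-agent` flag is a standard Chrome command-line switch.

```python
import random
from typing import Optional, Sequence

# Illustrative desktop User-Agent strings; replace with an up-to-date list.
USER_AGENTS = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
)


class UserAgentRotator:
    """Returns a User-Agent string, picking a different one every `every` requests."""

    def __init__(self, agents: Sequence[str] = USER_AGENTS, every: int = 5,
                 rng: Optional[random.Random] = None):
        if not agents:
            raise ValueError("at least one User-Agent is required")
        self._agents = list(agents)
        self._every = every
        self._rng = rng or random.Random()
        self._count = 0
        self._current = self._rng.choice(self._agents)

    def next_user_agent(self) -> str:
        if self._count >= self._every:
            # Pick any agent except the current one (if there is more than one).
            others = [a for a in self._agents if a != self._current]
            self._current = self._rng.choice(others or self._agents)
            self._count = 0
        self._count += 1
        return self._current


def chrome_with_user_agent(user_agent: str):
    """Start Chrome that reports `user_agent` in its request headers (requires selenium)."""
    from selenium import webdriver  # lazy import: the rotator itself needs no dependency

    options = webdriver.ChromeOptions()
    options.add_argument(f"--user-agent={user_agent}")
    return webdriver.Chrome(options=options)
```

As with proxies, the flag is applied at launch, so a new User-Agent means a new driver; rotating both at the same moment keeps the number of browser restarts down.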

In the next posts we will continue to discuss the challenges you may face when scraping online information, along with the alternatives. You are also more than welcome to check our recent article about the differences between in-house scraping and a web scraping API.